Karl Robinson

December 2, 2025

Karl is CEO and Co-Founder of Logicata – he’s an AWS Community Builder in the Cloud Operations category, and AWS Certified to Solutions Architect Professional level. Knowledgeable, informal, and approachable, Karl has founded, grown, and sold internet and cloud-hosting companies.

This year I did not make it to Las Vegas in person, but thankfully the Matt Garman AWS re:Invent 2025 keynote was live streamed for the first time into Fortnite! Perhaps I’m not the target market for that though, so I streamed it from my web browser. 4pm is also a much more sociable time to consume the keynote, rather than 8am with jet lag!

Matt started by telling us that the AWS Cloud is growing – it is now made up of 28 Regions and 130 Availability Zones, and this year alone they added 3.8GW of datacenter power capacity.

He went on to reiterate that Security is Priority 1 for AWS, which is the reason that customers such as US intelligence, Nasdaq & Pfizer run on AWS.

Matt mentioned AWS Partners in the first 10 minutes, followed by Unicorn Startups – more unicorns have built on AWS than on any other cloud provider. This was followed up with an AudioShake case study – winner of last year’s Unicorn competition, AudioShake is using AWS to deliver advanced sound separation technology that isolates individual voices, even in noisy environments like health centres or media recordings. They’ve enabled use cases like restoring speech for ALS patients by separating and cloning original voice recordings before degradation. Running entirely on AWS, from inference and storage to orchestration, they’re making sound more customisable for humans and more understandable for machines.

Matt gave a heartfelt shoutout to developers, calling them the heart of AWS and the reason the platform exists. He reminded the audience that AWS was originally built to give every builder, whether in a garage or dorm room, the same access to infrastructure as the biggest companies. He emphasised that re:Invent is, and always has been, a learning conference built for developers, and called out AWS Heroes and the 1M+ strong user group community across 129 countries, including of course my home city of Brighton, with the AWS Brighton User Group! His core message: AWS exists to give developers the freedom to invent, and that mission hasn’t changed in 20 years.

He went on to frame AI agents as the next major inflection point in the evolution of AI—moving beyond assistants that just respond, to agents that act on your behalf. He said agents are where companies are finally starting to see material business returns from their AI investments. According to him, this shift will have as much impact as the cloud or the internet, with billions of agents eventually operating across every company and industry.

He gave examples of agents already accelerating healthcare, streamlining payroll, and scaling individual productivity by 10x. His big takeaway: we’re at the start of the agentic era, and AWS is building the infrastructure, services, and runtime to power that future—safely, securely, and at scale.

Matt highlighted AWS’s long-standing partnership with NVIDIA, noting that AWS was the first cloud provider to offer NVIDIA GPUs and has spent 15+ years obsessing over how to run them at hyperscale. He emphasised that AWS delivers the most stable GPU clusters in the industry—down to debugging BIOS-level glitches others ignore—to support demanding AI workloads.

He also showcased the new P6e GB300 instances, powered by NVIDIA’s GB300 NVL72 systems, offering 20x the compute of the previous-gen P5en. These are already being used by heavyweights like OpenAI and NVIDIA themselves to run massive training and inference jobs. The message was clear: if you’re serious about GPUs for AI, AWS is where you go when failure isn’t an option.

So, what were the new services and features that Matt Garman was excited to announce? This year the focus was firmly on AI, as you will see:

Compute

1. P6e NVIDIA GPUs

AWS P6e GB300 instances, powered by NVIDIA’s latest GB300 NVL72, bring 20x more compute than the P5en and are built for hyperscale AI workloads without the flaky cluster behaviour that plagues everyone else. AWS sweats the hardware-software integration details, down to BIOS-level fixes, to deliver unmatched GPU reliability, and that’s why outfits like OpenAI and NVIDIA trust it for massive model training runs. If you’re building agents or wrangling trillion-token models, this is AWS saying: we’ve got the muscle, and it actually works.

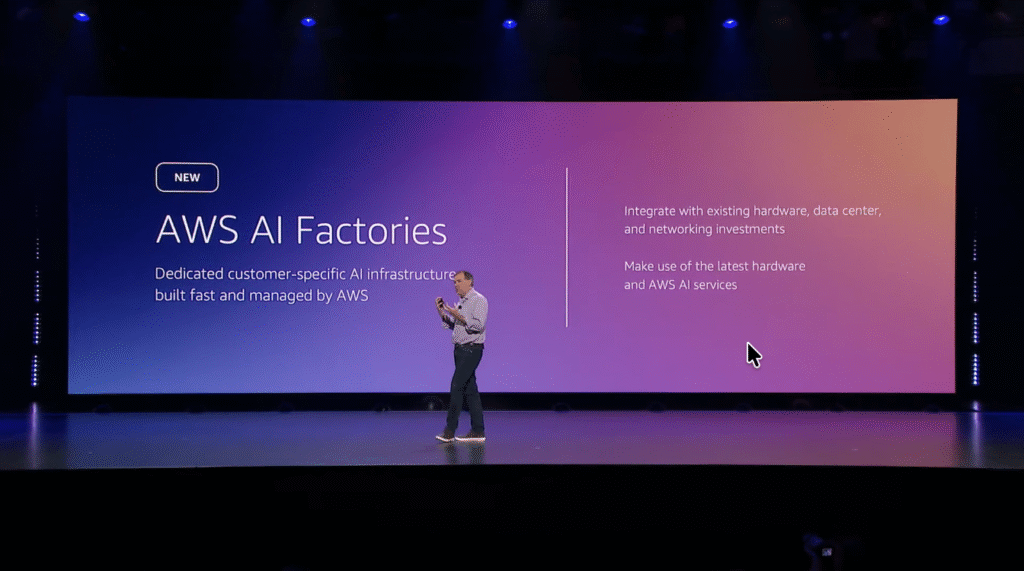

2. AWS AI Factories

AWS AI Factories let you drop hyperscale AI infrastructure directly into your own data centres, bringing EC2 UltraServers, Trainium chips, and Bedrock services behind your firewall, under your control. It’s designed for enterprises and governments who need the full power of AWS AI with local compliance and zero shared tenancy, effectively giving you your own private AWS region tuned for generative workloads.

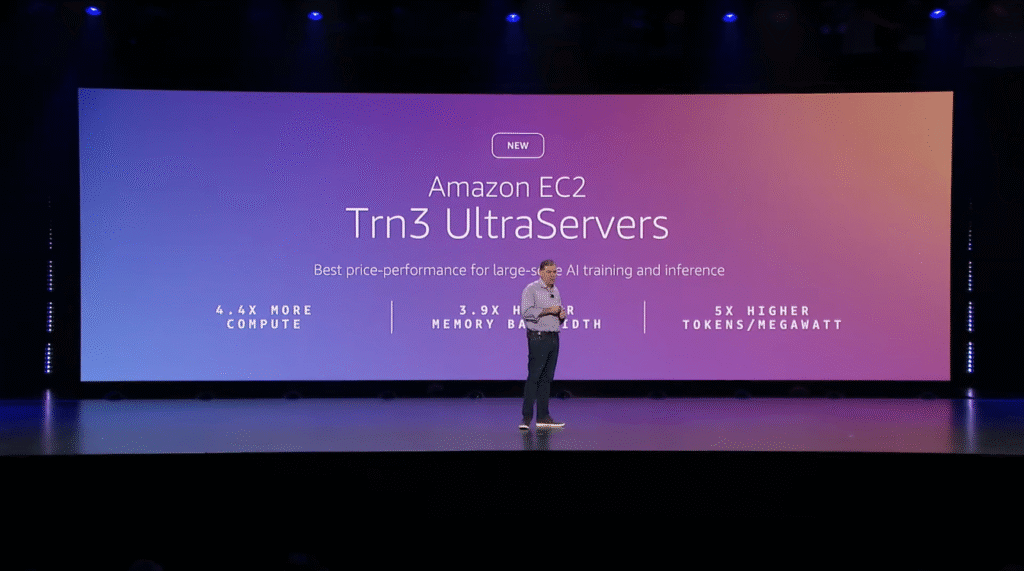

3. Trainium 3 (Trn3) UltraServers now Generally Available

Trainium 3 UltraServers are now generally available, offering 4.4x more compute, 3.9x more memory bandwidth, and 5x more AI tokens per megawatt than Trn2, delivering frontier-grade AI performance at a fraction of the power cost. With 144 Trainium 3 chips per instance and custom neuron switches tying it all together, this is AWS turning entire data centre racks into single-purpose AI supercomputers.

4. Trainium 4

Trainium 4 is already in the works and promises a 6x jump in compute, 4x more memory bandwidth, and double the high-bandwidth memory capacity of Trainium 3. It is designed to handle the next wave of frontier models that push past today’s architectural limits. It’s AWS building for a future where AI training needs aren’t just big, they’re absurd.

Inference

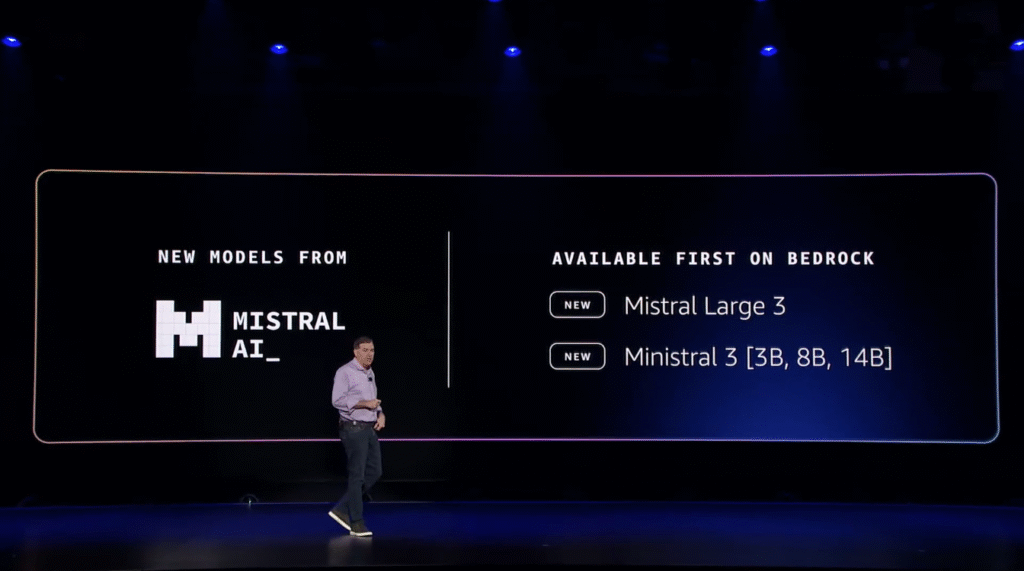

5. Mistral Large & Ministral 3 Open Weights Models available in Bedrock

Mistral Large and Ministral 3 are now live in Bedrock, giving developers high-performance open weights models with serious flexibility—from large-scale reasoning tasks to ultra-efficient edge deployments. It’s AWS doubling down on model choice so you’re not stuck with one-size-fits-none foundation models.

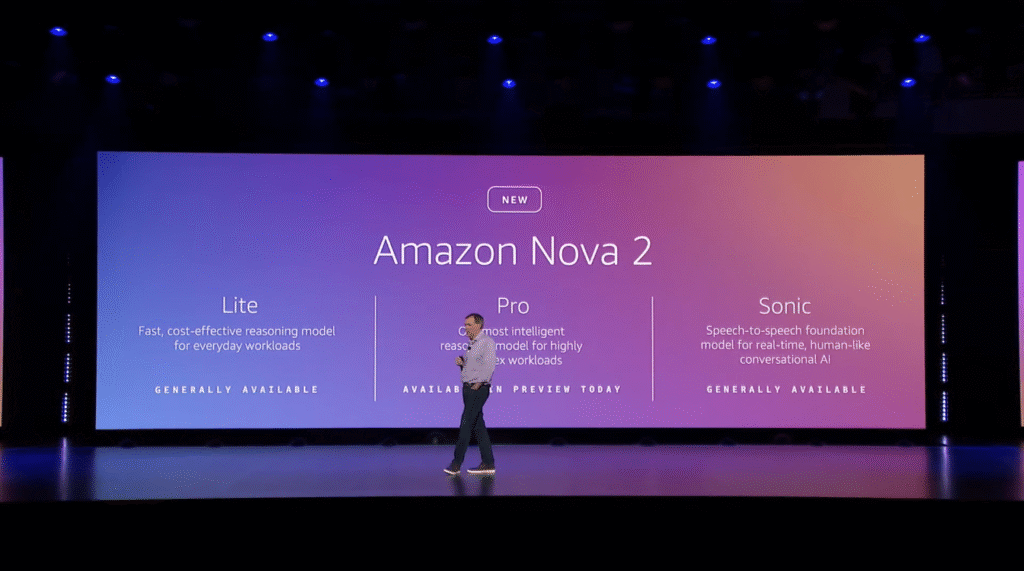

6. Amazon Nova 2

Amazon Nova 2 is AWS’s next-gen foundation model family, tuned for high performance and cost efficiency across a wide range of genAI workloads. Nova 2 Lite is the workhorse—fast, cost-effective, and strong on instruction following, tool use, code generation, and document extraction. It punches well above its weight against rivals like Claude Haiku and Gemini Flash.

Nova 2 Pro is built for complex reasoning and agentic workflows. In benchmark head-to-heads, it’s outperforming GPT-5.1, Gemini 3 Pro, and Claude Sonnet in areas like tool orchestration and intelligent decision-making. This is the model AWS wants you using when it really matters.

Then there’s Nova 2 Sonic—Amazon’s real-time speech-to-speech model. It handles multilingual, human-like conversations with low latency and is designed for voice agents that don’t sound like robots from the ’90s. Altogether, Nova 2 isn’t just a new model drop—it’s AWS staking a serious claim in the frontier model game.

7. Amazon Nova 2 Omni

Amazon Nova 2 Omni is a unified multimodal model that understands text, image, audio, and video inputs—then generates both text and images in response. It’s built for real-world complexity, like watching a keynote, understanding the slides and speech, and summarising it all for a sales team with visuals included. Instead of stitching together half a dozen tools, Omni does it all in one model—finally making true multimodal reasoning feel native, not hacked together.

8. Amazon Nova Forge

Amazon Nova Forge introduces “open training models,” letting you blend your proprietary data with AWS-curated datasets during model training—not just after. You start from a Nova checkpoint, inject your domain-specific knowledge mid-training, and end up with a custom model (a “Novella”) that understands your world without forgetting how to reason. It’s a game-changer for companies who’ve outgrown prompt engineering and want foundation-model power, but tailored to their business.

Sony Case Study – Amazon Nova Forge

Sony is using AWS to unify fan engagement across its massive entertainment portfolio—games, music, film, and anime—while also streamlining internal operations with generative AI. They’ve built a Sony Data Ocean on AWS that processes over 760TB of data from 500+ sources, fuelling everything from personalised fan experiences to creator insights. On the enterprise side, they’ve adopted Bedrock and Nova Forge to build custom LLMs that turbocharge compliance reviews and internal workflows, aiming for a 100x boost in efficiency. It’s a textbook example of turning legacy scale into AI-powered agility.

Agentic AI

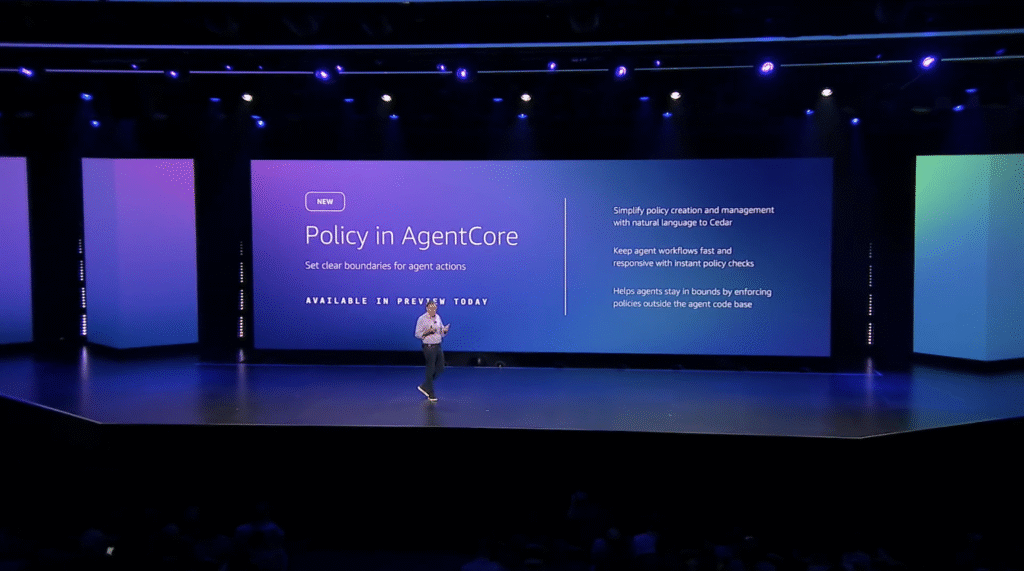

9. Policy in AgentCore

Policy in AgentCore gives you real-time, deterministic control over what your AI agents can do—with which tools, under what conditions, and to what extent. It enforces rules outside the agent’s code via a policy engine that intercepts every action before it touches your data or systems. Think of it like IAM for agents, but smarter and safer—no more hoping your prompt instructions will be followed.
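
To make the idea of enforcement outside the agent’s code a bit more concrete, here is a minimal Python sketch of a policy check sitting between an agent and its tools. It is purely illustrative: `Policy`, `PolicyEngine`, `issue_refund` and the refund limit of 100 are hypothetical names and values I made up, not the AgentCore API.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List


@dataclass(frozen=True)
class Policy:
    """A deterministic rule: which tool it covers and when a call is allowed."""
    tool: str
    allow: Callable[[Dict[str, Any]], bool]


class PolicyDenied(Exception):
    """Raised when a tool call is blocked before it runs."""


class PolicyEngine:
    """Hypothetical gateway that checks every tool call against the policies."""

    def __init__(self, policies: List[Policy]) -> None:
        self._policies = policies

    def call(self, tool_name: str, tool: Callable[..., Any], **args: Any) -> Any:
        matching = [p for p in self._policies if p.tool == tool_name]
        # Default deny: a tool with no matching policy is never invoked.
        if not matching or not all(p.allow(args) for p in matching):
            raise PolicyDenied(f"{tool_name} blocked for arguments {args}")
        return tool(**args)


def issue_refund(amount: float) -> str:
    """Stand-in for a real tool the agent might want to use."""
    return f"refunded {amount:.2f}"


# Hypothetical rule: refunds are only allowed up to 100.
engine = PolicyEngine([Policy("issue_refund", lambda a: a["amount"] <= 100)])

print(engine.call("issue_refund", issue_refund, amount=25.0))
try:
    engine.call("issue_refund", issue_refund, amount=5000.0)
except PolicyDenied as err:
    print(f"denied: {err}")
```

The point of the design is that the agent never gets to decide whether the rule applies: the check runs in the gateway, so a cleverly worded prompt cannot talk its way past it.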

10. AgentCore Evaluations

AgentCore Evaluations let you continuously monitor how well your agents are performing—beyond uptime and latency—by scoring them on things like correctness, helpfulness, and safety. It comes with 13 pre-built evaluators and supports custom metrics, giving you real-time insights into whether your agents are behaving as intended, even in production. It’s quality assurance for AI, baked into your stack.
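
As a rough illustration of what a custom metric could look like, the sketch below scores a batch of recorded agent interactions with two toy evaluators. Everything here is an assumption for illustration: the function names, the trace format and the scoring rules are mine, not the AgentCore Evaluations interface.

```python
from statistics import mean
from typing import Callable, Dict, List

# An evaluator maps (question, answer) to a score between 0.0 and 1.0.
Evaluator = Callable[[str, str], float]


def mentions_source(question: str, answer: str) -> float:
    """Toy helpfulness check: did the agent say where the answer came from?"""
    return 1.0 if "source:" in answer.lower() else 0.0


def stays_concise(question: str, answer: str) -> float:
    """Toy quality check: full marks at or under 50 words, less beyond that."""
    words = len(answer.split())
    return 1.0 if words <= 50 else 50 / words


def evaluate(traces: List[Dict[str, str]], evaluators: Dict[str, Evaluator]) -> Dict[str, float]:
    """Average each evaluator's score over a batch of recorded interactions."""
    return {
        name: mean(fn(t["question"], t["answer"]) for t in traces)
        for name, fn in evaluators.items()
    }


# Hypothetical traces, as if captured from an agent in production.
traces = [
    {"question": "What is our refund window?", "answer": "30 days. Source: policy.md"},
    {"question": "Who approves refunds?", "answer": "The finance team."},
]
scores = evaluate(traces, {"mentions_source": mentions_source, "stays_concise": stays_concise})
print(scores)
```

Real evaluators for correctness or safety would be far more involved, but the shape is the same: score each interaction, aggregate, and watch the trend over time.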

Adobe Case Study

Shantanu Narayen, Adobe’s CEO, laid out how the company is embedding agents across its entire ecosystem using AgentCore. From creative workflows in Firefly to document intelligence in Acrobat and marketing orchestration in Experience Cloud, Adobe is going agentic at scale. They’ve built custom agents for tasks like compatibility checks in Adobe Commerce and conversational assistants in Acrobat Studio—all secured and managed via AgentCore’s runtime, identity, and observability features. It’s Adobe moving beyond AI features into full-scale automation, powered by AWS.

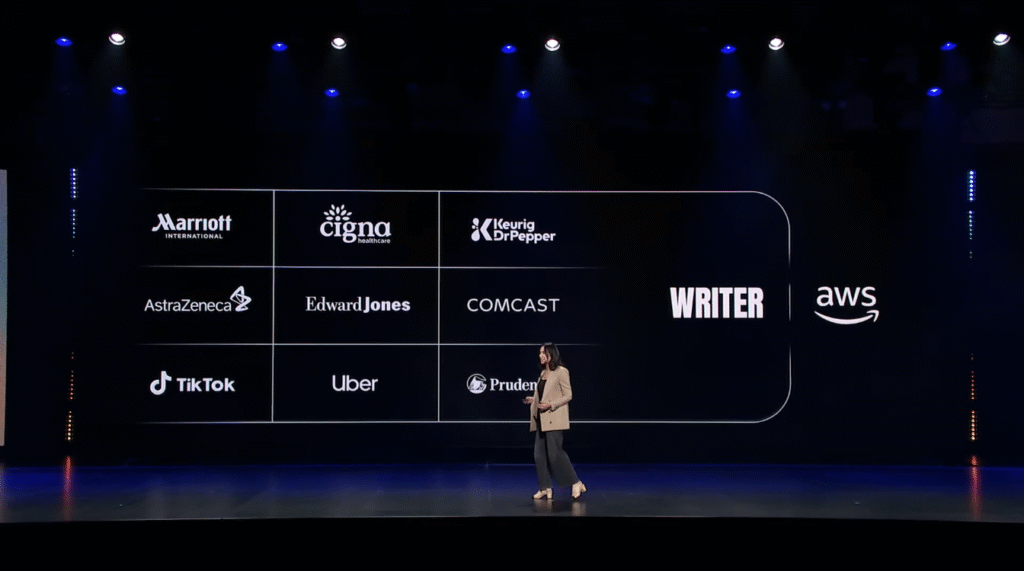

Writer Case Study

May Habib, CEO of Writer, shared how they’ve built a full-stack, enterprise-grade agent platform running on AWS—complete with their own Palmyra LLMs trained using SageMaker HyperPod and P6 instances. Writer’s platform enables non-technical users to automate complex workflows through reusable “playbooks” that connect tools, data, and systems. With new self-evolving agents and built-in supervision, they’re helping enterprise teams move faster while staying compliant—integrating directly with Bedrock guardrails and models to give IT leaders control without killing innovation.

Modernization

Modernization got a major boost with AWS Transform, which is now expanding beyond mainframes to support custom code migrations across virtually anything—Angular to React, VBA to Python, Bash to Rust, you name it. AWS launched Transform Custom, giving teams the power to build their own code transformation agents that handle outdated libraries, proprietary scripts, and legacy stacks without months of manual refactoring. It’s aimed squarely at developers drowning in technical debt who need a faster, safer route to modern architectures.

In the real world, companies like QAD are already seeing dramatic time savings—cutting migration efforts from weeks to days. This isn’t just another code converter; it’s agentic infrastructure remediation at enterprise scale. For teams battling brittle legacy code and trying to move faster without rewriting the past by hand, Transform is AWS saying: we’ll help you blow up the old rack and ship clean code instead.

Kiro

Kiro is AWS’s structured AI development environment that brings order to the chaos of prompt-based coding. It helps developers move from vague ideas to fully specced features with clear, step-by-step collaboration. Instead of guessing what you want, Kiro asks smart clarifying questions, understands your repo context, and generates clean, usable code aligned with your standards.

It’s designed for teams building serious systems—not toy apps—with support for complex features, large codebases, and integrated tools like GitHub, Jira, and Slack. Developers stay in control while Kiro handles the boilerplate, freeing them up to focus on logic, architecture, and delivery. Think of it as structured AI pair programming that actually respects your stack.

Frontier Agents

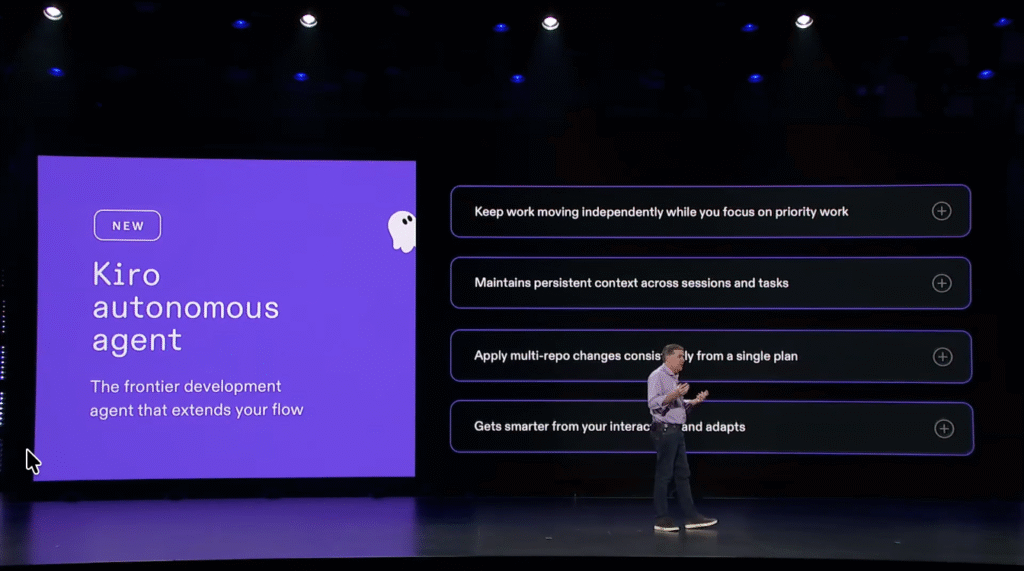

11. Kiro Autonomous Agent

The Kiro Autonomous Agent takes things further by acting like an extra team member that can handle real development tasks independently—no constant babysitting required. You give it a goal, and it figures out the steps, updates the codebase across repos, writes tests, opens pull requests, and keeps going without needing hand-holding every five minutes.

It’s designed for long-running, large-scale work—like upgrading libraries across microservices or implementing features that span multiple systems. It learns from your patterns, works alongside your tools, and can even run while you sleep. This isn’t just automation—it’s delegation, done safely, within your team’s workflow.

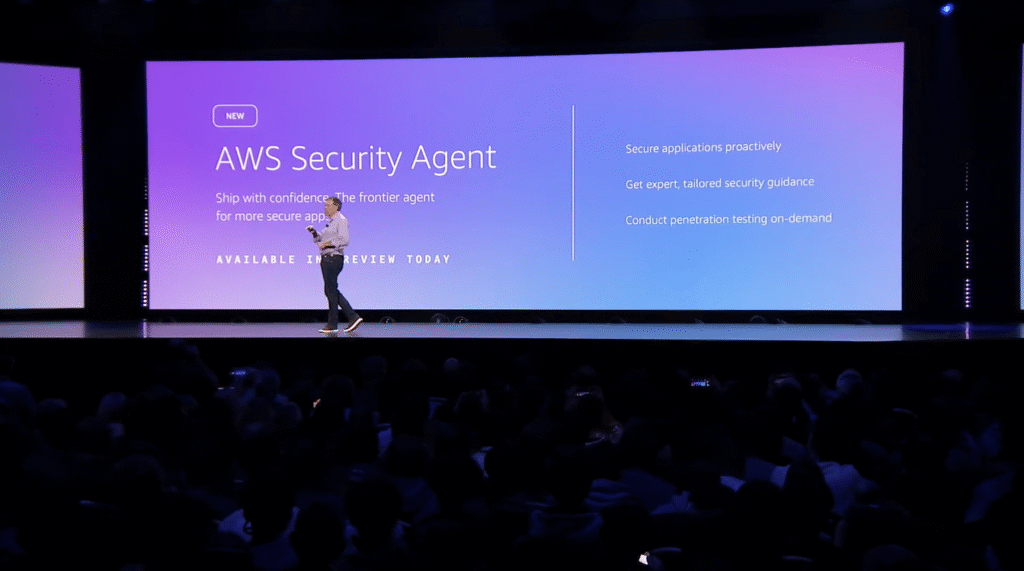

12. AWS Security Agent

The AWS Security Agent is a specialised agent built on AgentCore that helps internal security teams enforce governance at scale. It reviews code, flags violations, suggests remediations, and integrates with existing pipelines to act as an always-on compliance assistant. It’s AWS using its own tools to keep policy, security, and developer velocity aligned—proving that agentic security doesn’t have to slow you down, it can actually help you move faster without breaking things.

13. AWS DevOps Agent

The AWS DevOps Agent automates everyday ops tasks like environment provisioning, CI/CD updates, config validation, and rollback orchestration—all built on top of AgentCore. It plugs into your toolchain, understands your deployment patterns, and can act autonomously or alongside your team to keep things running smoothly. It’s like giving your DevOps team an extra pair of hands that never sleeps and never forgets the runbook.

25 launches in 10 minutes!

After spending 2 hours speaking exclusively about AI, Matt was challenged to do an entire keynote’s worth of announcements about the rest of the AWS business in 10 minutes, against a basketball-style shot clock! I only counted 23, but it was quick-fire, so maybe I missed a couple. Here is what I caught:

Compute

14. X8i Memory-Optimised Instances

New X family instances powered by custom Intel Xeon 6 chips deliver up to 50% more memory—perfect for memory-hungry workloads like SAP HANA and SQL Server.

15. Next-gen AMD EPYC Memory Instances

AWS rolled out AMD-based memory instances with 3TB of RAM, giving teams more options for big-memory applications without breaking the budget.

16. C8a Instances

For CPU-heavy workloads like fast processing and gaming, the new C8a instances offer 30% more performance using the latest AMD EPYC processors.

17. C8ine Instances

C8ine instances combine Intel Xeon 6 and Nitro V6 to deliver 2.5x better packet performance per vCPU, tailored for security and network-heavy apps.

18. M8azn Instances

M8azn brings the highest single-threaded clock speed in the cloud, ideal for latency-sensitive workloads like gaming, real-time data, and high-frequency trading.

19 & 20. EC2 M3 Ultra Mac + EC2 M4 Max Mac

Two new EC2 instances with the latest Apple silicon give devs a native environment for building, testing, and signing macOS and iOS apps—without leaving AWS.

21. Lambda Durable Functions

Lambda now supports long-running, stateful functions—great for workflows that need to wait on agents or process for hours or even days, with built-in retry logic.
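
The general pattern behind durable execution is checkpoint-and-replay: each step’s result is saved, so when the function is resumed it skips work that has already completed. The sketch below shows that pattern in plain Python, assuming an in-memory dictionary in place of durable storage. `DurableRun`, `step` and `flaky_charge` are hypothetical names for illustration and are not the Lambda Durable Functions SDK.

```python
import json
from typing import Any, Callable, Dict, Optional


class DurableRun:
    """Hypothetical helper: remembers finished steps so a re-run skips them."""

    def __init__(self, checkpoint: Dict[str, Any]) -> None:
        # In a real service this state would live in durable storage.
        self.checkpoint = checkpoint

    def step(self, name: str, fn: Callable[[], Any], max_attempts: int = 3) -> Any:
        if name in self.checkpoint:
            # Already completed in an earlier invocation: replay the result.
            return self.checkpoint[name]
        last_error: Optional[Exception] = None
        for _ in range(max_attempts):
            try:
                result = fn()
            except Exception as err:  # this sketch retries on any failure
                last_error = err
                continue
            self.checkpoint[name] = result
            return result
        raise RuntimeError(f"step {name!r} failed after {max_attempts} attempts") from last_error


calls = {"count": 0}


def flaky_charge() -> str:
    """Fails on the first attempt, then succeeds, to exercise the retry path."""
    calls["count"] += 1
    if calls["count"] == 1:
        raise ConnectionError("temporary network problem")
    return "charged"


state: Dict[str, Any] = {}
run = DurableRun(state)
order = run.step("reserve_stock", lambda: "reserved")
payment = run.step("charge_card", flaky_charge)

# Simulate the function being resumed later from the saved checkpoint.
resumed = DurableRun(json.loads(json.dumps(state)))
payment_again = resumed.step("charge_card", flaky_charge)
print(order, payment, payment_again, calls["count"])
```

On the resumed run the card is not charged a second time, which is exactly the property you want from a workflow that may pause for hours while it waits on an agent.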

Storage

22. 50TB Object Size in S3

S3’s maximum object size has increased 10x—from 5TB to 50TB—so you can store giant datasets, high-res media, and model checkpoints without slicing them up.

23. 10x Faster S3 Batch Operations

Batch operations in S3 now run 10x faster, speeding up data movement, tagging, and transformation at petabyte scale.

24. Intelligent Tiering for S3 Tables

S3 Tables now support intelligent tiering, automatically reducing storage costs by up to 80% as your Iceberg datasets grow.

25. S3 Table Replication Across Regions

You can now replicate S3 tables across regions and accounts, enabling globally consistent query performance with no extra tooling.

Storage and Analytics

26. S3 Access Point for NetApp ONTAP

AWS expanded S3 Access Points for FSx to include NetApp ONTAP—letting ONTAP customers access file system data as if it were S3-native.

27. S3 Vectors Now GA

S3 Vectors, the first cloud object store with native vector support, is now generally available—store and query trillions of embeddings with 90% lower cost.

28. GPU Acceleration for OpenSearch Vector Indexing

OpenSearch now supports GPU-accelerated vector indexing, cutting index time by 10x and cost by 75%.

29. EMR Serverless Clusters no longer need local storage

EMR Serverless just got simpler—clusters no longer require provisioning local storage, removing one of the last bits of “muck” in running big data jobs at scale. It’s now truly serverless: no infrastructure to manage, just code, data, and results.

Security

30. GuardDuty Support for ECS

GuardDuty’s advanced threat detection now works with ECS, extending automated security coverage to containerised workloads.

31. Security Hub General Availability

Security Hub is now GA, with new features like real-time risk analytics, a trends dashboard, and cleaner pricing.

32. Unified CloudWatch Log Store

CloudWatch adds a new unified data store to pull logs from AWS services and third parties like Okta and CrowdStrike—centralised, searchable, and analytics-ready.

Databases

33. RDS Storage Expansion (SQL/Oracle)

RDS now supports up to 256TB for SQL Server and Oracle databases—a 4x increase in capacity and IO throughput.

34. vCPU Configuration for SQL Server Licensing

You can now configure vCPU counts on RDS SQL Server to optimise Microsoft licensing costs, giving you more control over spend.

35. SQL Server Developer Edition Support

RDS now supports SQL Server Developer Edition at no cost, making it easier to build and test apps without burning a licence.

36. Database Savings Plans

AWS introduced Database Savings Plans, offering up to 35% off database workloads across engines—finally, unified pricing predictability for DBs.
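
For a back-of-the-envelope feel for what that could mean, here is a small Python sketch. The 35% figure is the ceiling quoted in the keynote; the monthly spend and the share of spend covered by the plan are made-up numbers, and real discounts will depend on engine, term and commitment.

```python
def savings_plan_estimate(on_demand_monthly: float, discount: float, coverage: float = 1.0) -> dict:
    """Estimate a monthly bill when part of the spend gets a Savings Plan discount.

    on_demand_monthly: current monthly database spend at on-demand rates
    discount: fractional discount on covered spend (0.35 means 35%)
    coverage: fraction of spend the commitment actually covers (0.0 to 1.0)
    """
    if not 0.0 <= discount <= 1.0 or not 0.0 <= coverage <= 1.0:
        raise ValueError("discount and coverage must be between 0 and 1")
    covered = on_demand_monthly * coverage
    saving = covered * discount
    return {
        "monthly_saving": round(saving, 2),
        "new_monthly_bill": round(on_demand_monthly - saving, 2),
        "annual_saving": round(saving * 12, 2),
    }


# Hypothetical customer: 10,000 a month on databases, 80% covered, best-case 35% discount.
estimate = savings_plan_estimate(10_000, discount=0.35, coverage=0.8)
print(estimate)
```

The coverage parameter matters: a headline discount only applies to the spend you commit to, so the effective saving on the whole bill is usually lower than the quoted maximum.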

Summary

So there you have it – 36 announcements over 2 hours and 10 minutes certainly kept me on my toes, and there will be plenty more to follow throughout re:Invent 2025.

AWS didn’t just drop features at re:Invent—they delivered a full-stack declaration that the age of agents, frontier models, and hyperscale infrastructure is here, and it’s production-ready. From silicon to savings plans, from open models to custom runtimes, this was AWS showing its cards: if you’re building the future, they want to be the platform that quietly makes it all work.

It’s clear that AWS is pivoting to be seen as more of an AI business than an IT infrastructure business, but we still got some exciting infrastructure announcements, even if they did feel a little rushed! The best news for Logicata AWS Managed Services customers will likely be Database Savings Plans – we’ve been waiting on these for a while.

I may have to rethink my blog post format for 2026…